About Me¶

|

|

Principal Data Scientist @ Monetate Labs • PyMC3 developer¶

@AustinRochford • austinrochford.com • github.com/AustinRochford¶

arochford@monetate.com • austin.rochford@gmail.com¶

About Monetate¶

- Founded 2008, web optimization and personalization SaaS

- Observed 5B impressions and $4.1B in revenue during Cyber Week 2017

Nontechnical marketer-focused¶

About this talk¶

Outline¶

- Web optimization

- A/B testing

- Multi-armed bandits

- Bayesian bandits

- Thompson sampling

- Nonstationarity

Kalman filters- Decayed posteriors

- Bandit bias

- Inverse propensity weighting

- Contextual bandits?

Web Optimization¶

A/B testing¶

A/B testing machinery¶

|

|

|

Multi-armed bandits¶

Multi-armed bandit systems¶

Bayesian Bandits¶

Beta-binomial model¶

$$ \begin{align*} x_A, x_B & = \textrm{number of rewards from users shown variant } A, B \\ x_A & \sim \textrm{Binomial}(n_A, r_A) \\ x_B & \sim \textrm{Binomial}(n_B, r_B) \\ r_A, r_B & \sim \textrm{Beta}(1, 1) \end{align*} $$

$$ \begin{align*} r_A\ |\ n_A, x_A & \sim \textrm{Beta}(x_A + 1, n_A - x_A + 1) \\ r_B\ |\ n_B, x_B & \sim \textrm{Beta}(x_B + 1, n_B - x_B + 1) \end{align*} $$

Thompson sampling¶

Thompson sampling randomizes user/variant assignment according to the probabilty that each variant maximizes the posterior expected reward.

The probability that a user is assigned variant A is

$$ \begin{align*} P(r_A > r_B\ |\ \mathcal{D}) & = \int_0^1 P(r_A > r\ |\ \mathcal{D})\ \pi_B(r\ |\ \mathcal{D})\ dr \\ & = \int_0^1 \left(\int_r^1 \pi_A(s\ |\ \mathcal{D})\ ds\right)\ \pi_B(r\ |\ \mathcal{D})\ dr \\ & \propto \int_0^1 \left(\int_r^1 s^{\alpha_A - 1} (1 - s)^{\beta_A - 1}\ ds\right) r^{\alpha_B - 1} (1 - r)^{\beta_B - 1}\ dr \end{align*} $$

fig

fig

(a_samples > b_samples).mean()

0.24299999999999999

Thompson sampling, redeemed¶

- Sample $\hat{r}_A \sim r_A\ |\ n_A, x_A$ and $\hat{r}_B \sim r_B\ |\ n_B, x_B$.

- Assign the user variant A if $\hat{r}_A > \hat{r}_B$, otherwise assign them variant B.

Simulating a bandit¶

class BetaBinomial:

def __init__(self, a0=1., b0=1.):

self.a = a0

self.b = b0

def sample(self):

return sp.stats.beta.rvs(self.a, self.b)

def update(self, n, x):

self.a += x

self.b += n - x

class Bandit:

def __init__(self, a_post, b_post):

self.a_post = a_post

self.b_post = b_post

def assign(self):

return 1 * (self.a_post.sample() < self.b_post.sample())

def update(self, arm, reward):

arm_post = self.a_post if arm == 0 else self.b_post

arm_post.update(1, reward)

A_RATE, B_RATE = 0.05, 0.1

N = 1000

rewards_gen = generate_rewards(A_RATE, B_RATE, N)

bandit = Bandit(BetaBinomial(), BetaBinomial())

arms = np.empty(N, dtype=np.int64)

rewards = np.empty(N)

for t, arm_rewards in tqdm(enumerate(rewards_gen), total=N):

arms[t] = bandit.assign()

rewards[t] = arm_rewards[arms[t]]

bandit.update(arms[t], rewards[t])

100%|██████████| 1000/1000 [00:00<00:00, 6266.30it/s]

fig

Simulating many bandits¶

N_BANDIT = 100

arms = np.empty((N_BANDIT, N), dtype=np.int64)

rewards = np.empty((N_BANDIT, N))

for i in trange(N_BANDIT):

arms[i], rewards[i] = simulate_bandit(

generate_rewards(A_RATE, B_RATE, N), N

)

100%|██████████| 100/100 [00:13<00:00, 7.36it/s]

fig

A/A testing¶

A/A bandits¶

N = 2000

arms, rewards = simulate_bandits(

lambda: generate_rewards(A_RATE, A_RATE, N),

N, N_BANDIT

)

100%|██████████| 100/100 [00:23<00:00, 4.18it/s]

fig

fig

Why Bayesian?¶

- Thompson sampling is intuitive and simple to implement

- Principled randomization

- Robustness against delayed feedback

- Marketers are natural Bayesians!

Nonstationarity¶

fig

Kalman filters¶

Decayed posterior¶

For $0 < \varepsilon \ll 1$

$$\color{purple}{\underbrace{\tilde{\pi}_{t + 1}(\theta\ |\ \mathcal{D}_{t + 1}, \mathcal{D}_{1, t})}_{\textrm{decayed posterior after time } t + 1}} \propto \left(\color{blue}{f(\mathcal{D}_{t + 1}\ |\ \theta)} \cdot \tilde{\color{red}{\pi}}_{\color{red}{t}}\color{red}{(\theta\ |\ \mathcal{D}_{1,t})}\right)^{1 - \varepsilon} \cdot \color{green}{\underbrace{\pi_0(\theta)}_{\textrm{no-data prior}}}^{\varepsilon}$$

If we stop collecting data at time $T$,

$$\color{purple}{\tilde{\pi}_{t}(\theta\ |\ \mathcal{D}_{1, T})} \overset{t \to \infty}{\longrightarrow} \color{green}{\pi_0(\theta)}.$$

Decayed beta-Binomial¶

If

$$ \begin{align*} \color{green}{\pi_0(r)} & = \textrm{Beta}(\alpha_0, \beta_0) \\ \color{purple}{\tilde{\pi}_t(r\ |\ \mathcal{D}_{1, t})} & = \textrm{Beta}(\alpha_t, \beta_t) \end{align*} $$

and no data is observed at time $t + 1$, then

$$\color{purple}{\tilde{\pi}_{t + 1}(r\ |\ \mathcal{D}_{t + 1} = \emptyset, \mathcal{D}_{1, t})} = \textrm{Beta}((1 - \varepsilon) \alpha_t + \varepsilon \alpha_0, (1 - \varepsilon) \beta_t + \varepsilon \beta_0).$$

fig

class DecayedBetaBinomial(BetaBinomial):

def __init__(self, eps, a0=1., b0=1.):

super(DecayedBetaBinomial, self).__init__(a0=a0, b0=b0)

self.eps = eps

self.a0 = a0

self.b0 = b0

def update(self, n, x):

super(DecayedBetaBinomial, self).update(n, x)

self.a = (1 - self.eps) * self.a + self.eps * self.a0

self.b = (1 - self.eps) * self.b + self.eps * self.b0

fig

arms, rewards = simulate_bandits(

lambda: generate_changing_rewards(A_RATES, B_RATES, N),

N, N_BANDIT,

)

decay_arms, decay_rewards = simulate_bandits(

lambda: generate_changing_rewards(A_RATES, B_RATES, N),

N, N_BANDIT,

prior=lambda: DecayedBetaBinomial(0.01)

)

100%|██████████| 100/100 [00:23<00:00, 4.18it/s] 100%|██████████| 100/100 [00:24<00:00, 4.02it/s]

fig

Bandit Bias¶

fig

fig

fig

Inverse propensity weighting¶

$$ \hat{r}^{\textrm{IPS}}_A = \frac{1}{n} \sum_{t = 1}^n \frac{Y_i \cdot \mathbb{I}(A_t = 1)}{P(A_t = 1\ |\ \mathcal{D}_{1, t - 1})} $$

where

$$ A_t = \begin{cases} 1 & \textrm{session } t \textrm{ saw variant A} \\ 0 & \textrm{session } t \textrm{ saw variant B} \end{cases}. $$

Calculating $P(A_t = 1\ |\ \mathcal{D}_{1, t - 1})$

ts_fig

%%time

N_MC = 500

a_samples = sp.stats.beta.rvs(

a_post_alpha[..., np.newaxis],

a_post_beta[..., np.newaxis],

size=(N_BANDIT, N, N_MC)

)

b_samples = sp.stats.beta.rvs(

b_post_alpha[..., np.newaxis],

b_post_beta[..., np.newaxis],

size=(N_BANDIT, N, N_MC)

)

a_prob = np.empty((N_BANDIT, N))

a_prob[:, 0] = 0.5

a_prob[:, 1:] = (a_samples > b_samples).mean(axis=2)[:, :-1]

CPU times: user 21.2 s, sys: 650 ms, total: 21.9 s Wall time: 21.9 s

a_ips_est = 1. / plot_t * (rewards * (arms == 0) / a_prob_).cumsum(axis=1)

b_ips_est = 1. / plot_t * (rewards * (arms == 1) / (1 - a_prob_)).cumsum(axis=1)

fig

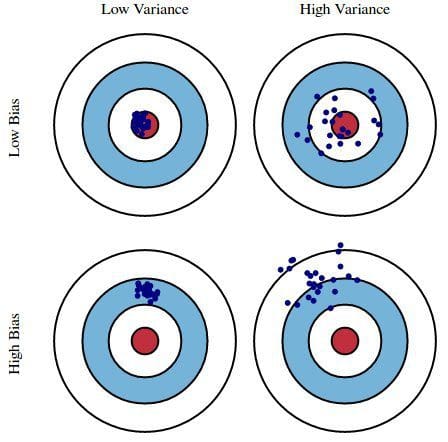

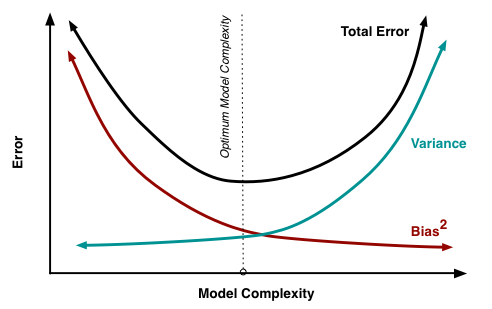

Bias-variance tradeoff¶

fig

Intuition: the variant with the highest reward rate should receive the most traffic.

fig